The road doesn’t care if your driver is a person or a processor. Accidents don’t stop to ask who was behind the wheel—human or hardware. As more cities watch the rise of both rideshare and self-driving vehicles, the crash stats are starting to get louder. Numbers don’t lie. Neither do headlines.

Key Points:

- Accidents linked to rideshare and autonomous cars keep rising

- Legal blame often sits in a gray area

- Many cities lack updated laws for new driving models

- Public safety measures lag behind tech growth

- Liability shifts are making lawsuits more complex

Numbers That Should Rattle Everyone

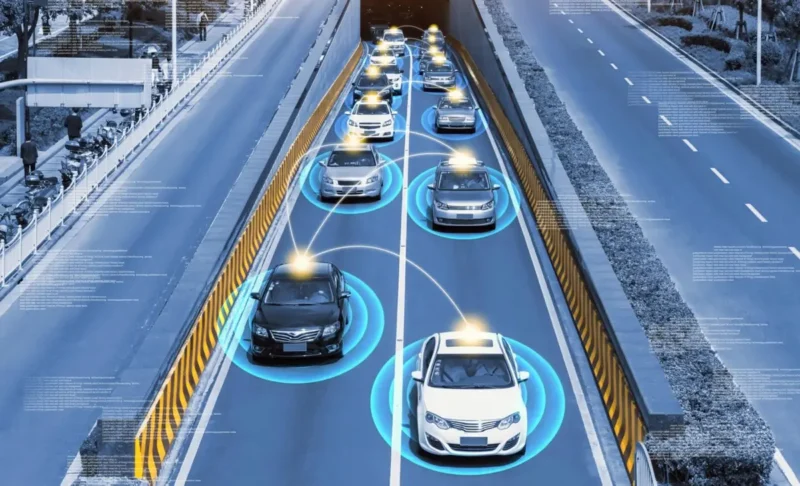

Between 2020 and 2023, traffic incidents tied to rideshare companies like Uber and Lyft, and to driverless platforms such as Waymo, climbed steadily in major cities. In San Francisco, autonomous vehicles stalled traffic more than 60 times in a single month. These were not fender-benders but standstill events that blocked buses and emergency services. Those numbers tell a bigger story than headlines ever could.

Insurance firms have reported a spike of more than 35% in claims involving app-based drivers. Speed bonuses and constant pings from multiple platforms push many drivers toward dangerous behavior. Some even admit to skipping sleep to boost their ride counts.

Then there’s the autonomous problem. Waymo and Cruise keep hitting the news for cars that freeze mid-intersection or drive into construction zones. One minor tech error in a driverless car can create gridlock. Or worse.

Not Your Standard Fender-Bender

Traditional crashes often mean two drivers, two insurance cards, and maybe a traffic cop. That changes once a rideshare trip or an autonomous vehicle is involved. Everything gets murky. Legal responsibility spreads across more layers than a lasagna.

Here’s where things get tangled:

- Was the app active during the incident?

- Was the driver operating under Uber or Lyft policy at the time?

- Was the car running in autonomous mode?

- Did a human supervisor override any decisions?

Each question turns into a legal maze. One missing answer can flip a case.

The Laws Are Trying to Keep Up

State lines don’t just separate geography. They separate liability logic. California might classify an Uber driver as an independent contractor, while New York might treat the same driver as an employee. And neither has clear rules for a driverless car operating on its own.

Federal lawmakers have barely scratched the surface on regulating fully autonomous fleets. Instead, they toss the hot potato to local governments. That leads to confusion, lawsuits, and policy gaps.

Companies move faster than legislation. So tech hits the road before legal systems can catch up.

Personal injury law firms such as Los Angeles-based Omega Law Group have seen an increase in rideshare and self-driving car accident cases over the past year.

The firm reports a sharp uptick in cases involving poorly trained rideshare drivers and unexpected malfunctions in autonomous systems. Injured riders find themselves stuck in a bureaucratic tug-of-war. One missed clause in the terms of service can leave victims with unpaid bills and no compensation.

The Blame Game

Liability is no longer clear-cut. It jumps across entities like a hot wire. When a crash involves rideshare or autonomous features, these questions come into play:

- Was the driver distracted?

- Did the app pressure quicker pickups?

- Did the software fail?

- Were roads poorly marked or managed?

Developers point to beta testing. Companies blame drivers. Drivers blame apps. Victims suffer delays while courts untangle the mess.

Safety Features Don’t Mean Safe Roads

Modern vehicles come packed with sensors, cameras, and lane assist. That doesn’t stop real-life issues. Pedestrians, potholes, and unpredictable city traffic still confuse even the best onboard systems.

Many drivers for platforms like Uber and Lyft admit to juggling tasks mid-ride. Some deal with backseat complaints, phone alerts, and GPS reroutes—all in real time.

That leads to trouble, especially in tight urban areas. A single alert missed during app use can lead to injury or worse. Cars may be smart, but human attention still matters.

Policy Patchwork Creates Chaos

Local policies shape how technology meets the road. Some cities issue endless permits for autonomous testing. Others ban it outright. The result? A national patchwork that offers no real standard.

- San Francisco allows full operation of driverless cars at night.

- Austin limits them near school zones.

- Chicago requires safety backups.

This inconsistency means victims get different outcomes based on where the crash happens. That creates injustice—and confusion.

Insurance Gets Complicated

Insurance plans for rideshare and autonomous vehicles read like puzzle books. Terms shift depending on whether the app is active or idle, if the driver is logged in, or if the car was self-driving.

Key concerns:

- Does coverage apply between rides?

- Who pays when an autonomous feature causes a crash?

- Are passengers covered fully during shared rides?

Some insurers deny claims unless every detail lines up. That leaves people without support when they need it most.

Public Trust Is Fading

Consumer surveys don’t lie. Confidence in autonomous cars has dropped over the past two years. Trust in rideshare apps has dipped as well, especially in cities with multiple accidents tied to Uber and Lyft.

What bothers users?

- Vague emergency protocols

- Limited accountability post-incident

- App glitches during dangerous moments

People want clear answers and faster help. Many don’t get either. That drives frustration—and lost customers.

What Can Be Done?

Not everything depends on future tech. Many solutions already exist but need proper enforcement.

- Create one national law for platform liability

- Require stronger training for app-based drivers

- Mandate clearer disclosures for autonomous operations

- Add public access to accident reports

- Set hard caps on rides per driver each day

Rules matter. So do consequences. Stronger oversight can reduce chaos.

Cities Must Lead, Not React

Local governments hold more power than they think. They write permits, approve test zones, and shape infrastructure. They can force companies like Waymo, Uber, and Lyft to follow higher safety standards.

Suggestions for cities:

- Schedule monthly audits of autonomous fleets

- Launch city-funded legal clinics for crash victims

- Penalize platforms that delay claims processing

Policy isn’t about waiting. It’s about acting before damage escalates.

What Victims Should Know

If injured in a rideshare or driverless incident, victims must act fast. Delays hurt claims. So does confusion about coverage.

Here’s what matters most:

- Document the event fully

- Seek medical care immediately

- Contact a lawyer with tech crash experience

Platforms don’t always help victims. Knowing your rights matters.

Legal Firms Are Evolving Too

Top law firms now train staff in tech systems. They hire coders, accident modelers, and software experts to assist in traffic litigation.

The best firms decode app logs and software outputs. That skill changes case outcomes.

Legal teams must now argue both physical impact and code logic. That shift requires sharper knowledge and deeper resources.

It Won’t Slow Down Anytime Soon

Uber keeps expanding into new cities. Lyft adds new ride types. Waymo and other autonomous players push further without slowing. Growth won’t stop just because crash numbers rise.

Without updated rules, each expansion brings new risk.

That risk hits roads, people, and legal systems—fast.

Final Word

Accountability must grow as fast as tech does. Right now, the gap grows wider by the day. Cities stall. Platforms dodge. Victims wait.

Lawmakers must draw clear lines that protect real people. Every accident should trigger action, not silence.

The road doesn’t forgive. So laws must prevent, not just react. Better rules mean fewer victims. That’s the goal.
