Data centers are like the unsung heroes of our digital age, tirelessly working behind the scenes. Imagine the vast amount of power they need to keep our online world spinning smoothly.

It’s staggering to think that these hubs of technology consume about three percent of the globe’s electricity. And with the rise of even more powerful hyperscale facilities, their thirst for energy is only set to grow, even as we make strides in efficiency.

If you’re considering colocation, it’s crucial to get familiar with the power and cooling dynamics of these data centers. Why?

Because it directly influences your costs and how you’ll scale up in the future. The good news is, once you dive in, these aspects are pretty straightforward to grasp.

Data Center Power Demands

Assessing power requirements is one of the first tasks any organization must undertake when it decides to move assets into a data center. The power demands of equipment usually make up a sizable portion of colocation costs, and deploying powerful servers in high-density cabinets will be more expensive than a comparable number of less-impressive units.

Regardless of the type of servers being used, they will also need power distribution units (PDUs) rated for the current they draw while in use. A data center’s electrical system should incorporate some level of redundancy that includes uninterruptible power supply (UPS) battery systems and a backup generator that can provide enough megawatts of power to keep the facility running if the main power is disrupted for any length of time.

Should the power ever go out, the UPS systems will keep all computing equipment up and running long enough for the generator to come online. In many cases, data center power infrastructure incorporates more than one electrical feed running into the facility, which provides additional redundancy.
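To make the UPS-to-generator handoff concrete, here is a minimal sketch of the arithmetic involved. It assumes a simple linear model (usable battery energy divided by load), which real batteries only approximate, and the function names, the safety factor, and all figures are hypothetical.

```python
def ups_runtime_minutes(usable_battery_kwh, load_kw):
    """Rough UPS runtime: usable stored energy divided by the load it carries."""
    if load_kw <= 0:
        raise ValueError("load_kw must be positive")
    return usable_battery_kwh / load_kw * 60.0


def bridges_generator_start(usable_battery_kwh, load_kw,
                            generator_start_minutes, safety_factor=2.0):
    """True if the UPS can carry the load until the generator is online.

    safety_factor is an assumed margin, not an industry standard.
    """
    runtime = ups_runtime_minutes(usable_battery_kwh, load_kw)
    return runtime >= generator_start_minutes * safety_factor


# Hypothetical figures: 50 kWh usable, 300 kW load, 2 minute generator start.
print(ups_runtime_minutes(50.0, 300.0))
print(bridges_generator_start(50.0, 300.0, generator_start_minutes=2.0))
```

A facility would rely on the manufacturer’s discharge curves rather than this linear estimate, but the comparison it makes (runtime versus generator start time) is the one described above.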

Colocation facilities also have clearly defined power specifications that indicate how much power they can supply to each cabinet. For high-density deployments, colocation customers need to find a data center infrastructure able to provide between 10 and 20 kW of power per cabinet.
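A quick way to sanity-check a deployment against a cabinet’s power allocation is to total the per-server draw and convert it to current. The sketch below assumes a single-phase 208 V feed and a power factor of 1, and the server wattages are made-up illustration values.

```python
def cabinet_load_kw(server_watts):
    """Total cabinet draw in kW from a list of per-server draws in watts."""
    return sum(server_watts) / 1000.0


def circuit_amps(load_kw, volts=208.0):
    """Current for a single-phase load, I = P / V (power factor assumed 1)."""
    return load_kw * 1000.0 / volts


def fits_cabinet(server_watts, cabinet_limit_kw):
    """True if the combined draw stays within the cabinet's power allocation."""
    return cabinet_load_kw(server_watts) <= cabinet_limit_kw


# Hypothetical deployment: 20 servers at 450 W each in a 10 kW cabinet.
servers = [450] * 20
load = cabinet_load_kw(servers)
print(f"load: {load:.1f} kW, current at 208 V: {circuit_amps(load):.1f} A")
print("fits 10 kW cabinet:", fits_cabinet(servers, 10.0))
```

The same check run against projected future hardware gives an early signal of whether a facility’s per-cabinet limit leaves room to grow.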

While a company with much lower power needs might not be concerned with these limits initially, they should always keep in mind that their power requirements could increase over time as they grow. Scaling operations within a data center environment with the power design to accommodate them is often preferable to the hassle of migrating to an entirely different facility.

How are Data Centers Cooled?

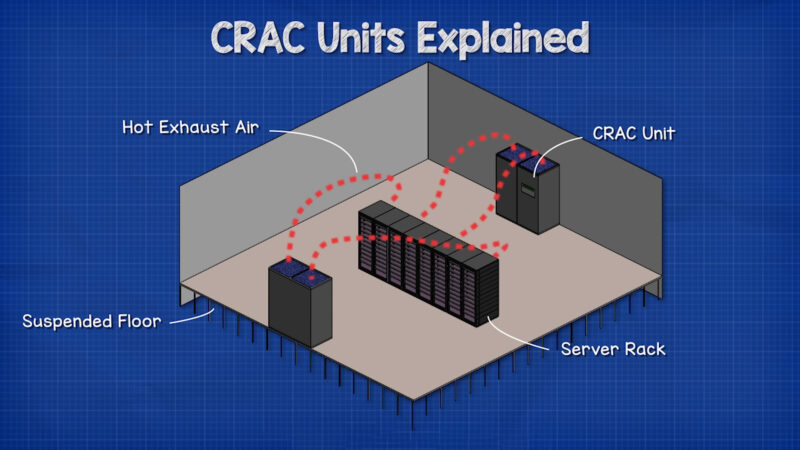

Traditional data center cooling techniques use a combination of raised floors and computer room air conditioner (CRAC) or computer room air handler (CRAH) infrastructure. The CRAC/CRAH units would pressurize the space below the raised floor and push cold air through the perforated tiles and into the server intakes.

Once the cold air passed over the server’s components and vented out as hot exhaust, that air would be returned to the CRAC/CRAH for cooling. Most data centers would set the CRAC/CRAH unit’s return temperature as the main control point for the entire data floor environment.
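The amount of air that has to pass over the servers follows from the sensible heat relation: heat removed equals air density times specific heat times volumetric flow times temperature rise. The sketch below applies it with approximate textbook properties for air; the 10 kW load and 12 degree rise are assumed example values.

```python
AIR_DENSITY = 1.2           # kg/m^3, approximate value for room-temperature air
AIR_SPECIFIC_HEAT = 1005.0  # J/(kg*K), approximate
CFM_PER_M3S = 2118.88       # cubic feet per minute in one cubic meter per second


def required_airflow_m3s(heat_w, delta_t_k):
    """Airflow needed to carry away heat_w watts with a delta_t_k air temperature rise."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    return heat_w / (AIR_DENSITY * AIR_SPECIFIC_HEAT * delta_t_k)


def m3s_to_cfm(flow_m3s):
    """Convert cubic meters per second to cubic feet per minute."""
    return flow_m3s * CFM_PER_M3S


# Example: a 10 kW cabinet with a 12 K rise between inlet and exhaust.
flow = required_airflow_m3s(10_000.0, 12.0)
print(f"{flow:.2f} m^3/s, about {m3s_to_cfm(flow):.0f} CFM")
```

Halving the temperature rise doubles the airflow required, which is one reason mixing of hot and cold air is so costly.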

The problem with this deployment is that it’s inefficient and lacks any fine-tuned control: cold air is simply vented into the server room.

While this worked well enough for small, low-density deployments with low power requirements, it worked less well for larger, higher-density data floors. That’s why most facilities adopted hot aisle and cold aisle containment strategies to physically separate the cool air intended for the server’s intakes from the hot air being expelled by its exhaust vents.

Preventing this air from mixing results in more consistent temperatures and maximizes efficiency by ensuring that cold air remains cold and hot air is directed to the air handlers without raising the temperature of the ambient environment. Modern data centers use a variety of innovative data center cooling technologies to maintain ideal and efficient operating conditions.

These solutions run the gamut from basic fans to much more complex heat transfer technologies. Some of them even rely upon external sources of cold air or water to facilitate much more energy-efficient data center cooling.

What is the Difference Between CRAC and CRAH Units?

A computer room air conditioner (CRAC) unit isn’t all that different from a conventional AC unit that uses a compressor to keep refrigerant cold. They operate by blowing air over a cooling coil that’s filled with refrigerant. Relatively inefficient, they generally run at a constant level and don’t allow for precision cooling controls.

Computer room air handlers (CRAHs), on the other hand, use a chilled water plant that supplies the cooling coil with cold water. Air is cooled when it passes over this coil.

While the basic principle is similar to a CRAC unit, the big difference is the absence of a compressor, which means the CRAH consumes much less energy overall.

What is the Ideal Temperature for a Data Center?

According to the American Society of Heating, Refrigerating and Air-Conditioning Engineers (ASHRAE), the average temperature for server inlets (that is, the air that’s being drawn into the server to cool the interior components) should be between 18 and 27 degrees Celsius (or 64.4 to 80.6 degrees Fahrenheit) with a relative humidity between 20 and 80 percent. This is quite a large band, however, and the Uptime Institute advises an upper limit of 25 degrees Celsius (77 degrees Fahrenheit).

Keep in mind that this is the recommended temperature for the actual server inlets, not the entire server room. The air around the servers is going to be warmer simply due to the dynamics of heat transfer.
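As a small illustration, the inlet range quoted above can be turned into a check that a monitoring script might run against sensor readings. The function names and the sample readings are hypothetical; the 18 to 27 degree limits are the ones cited in the text.

```python
INLET_MIN_C = 18.0  # lower bound quoted above
INLET_MAX_C = 27.0  # upper bound quoted above


def c_to_f(temp_c):
    """Convert degrees Celsius to degrees Fahrenheit."""
    return temp_c * 9.0 / 5.0 + 32.0


def classify_inlet(temp_c):
    """Label a server inlet reading relative to the quoted range."""
    if temp_c < INLET_MIN_C:
        return "below range"
    if temp_c > INLET_MAX_C:
        return "above range"
    return "within range"


# Hypothetical inlet readings in degrees Celsius.
for reading in (16.5, 22.0, 28.3):
    print(f"{reading} C ({c_to_f(reading):.1f} F): {classify_inlet(reading)}")
```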

High-density servers, in particular, generate a tremendous amount of heat. That’s why most server rooms tend to be much colder than the above limits, usually around 19 to 21 degrees Celsius (66 to 70 degrees Fahrenheit).

It’s worth noting that hyperscale data centers run by large companies like Google often run at higher temperatures, closer to the 27 degrees Celsius (80 degrees Fahrenheit) mark. That’s because they run on an “expected failure” model that anticipates servers will fail on a regular basis and has put software backups in place to route around failed equipment.

For a large enterprise, the cost of replacing failed servers more frequently than normal may actually be less expensive over time than the cost of operating a hyperscale facility at lower temperatures. This is rarely true for smaller organizations, however.
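The trade-off can be framed as a simple comparison of yearly cooling cost against yearly replacement cost. The sketch below is only a toy model: every figure in it (load, cooling overhead, electricity price, failure rates, server cost) is an invented placeholder, and real decisions involve many more factors.

```python
HOURS_PER_YEAR = 8760


def annual_cooling_cost(it_load_kw, cooling_kw_per_it_kw, price_per_kwh):
    """Yearly cost of the energy spent on cooling a given IT load."""
    return it_load_kw * cooling_kw_per_it_kw * HOURS_PER_YEAR * price_per_kwh


def annual_replacement_cost(server_count, annual_failure_rate, cost_per_server):
    """Yearly cost of replacing the servers expected to fail."""
    return server_count * annual_failure_rate * cost_per_server


def total_annual_cost(it_load_kw, cooling_kw_per_it_kw, price_per_kwh,
                      server_count, annual_failure_rate, cost_per_server):
    """Cooling energy cost plus replacement cost for one operating scenario."""
    return (annual_cooling_cost(it_load_kw, cooling_kw_per_it_kw, price_per_kwh)
            + annual_replacement_cost(server_count, annual_failure_rate, cost_per_server))


# Hypothetical scenarios: a cooler room (more cooling, fewer failures)
# versus a warmer room (less cooling, more failures).
cooler_total = total_annual_cost(1000, 0.40, 0.10, 5000, 0.02, 4000)
warmer_total = total_annual_cost(1000, 0.30, 0.10, 5000, 0.03, 4000)
print(f"cooler room: {cooler_total:,.0f}  warmer room: {warmer_total:,.0f}")
```

With these placeholder inputs the cooler room comes out cheaper; the result flips only when cooling savings outweigh the added replacement cost, which is the condition hyperscale operators are betting on.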

Data Center Cooling Technology

While the power requirements of colocated equipment are a major factor in colocation costs, a data center’s cooling solutions are significant as well. The high costs of cooling infrastructure are often one of the leading reasons why companies abandon on-premises data solutions in favor of colocation services.

Private data centers are often quite inefficient when it comes to their cooling systems. They also usually lack the site monitoring capabilities of colocation facilities, which makes it more difficult for them to fully optimize their infrastructure to reduce cooling demands.

With power densities increasing rapidly, many companies are investing heavily in new data center cooling technologies to ensure that they’ll be able to harness the computing power of the next generation of processors. Larger tech companies like Google are even leveraging the power of artificial intelligence to improve cooling efficiency.

Previously farfetched solutions like liquid server cooling systems are quickly becoming commonplace as companies experiment with innovative ways to cool a new generation of high-performance processors. One of the most important innovations in data center infrastructure management in recent years has been the application of predictive analytics.

Today’s data centers generate massive amounts of information about their power and cooling demands. The most efficient facilities have harnessed that data to model trends and usage patterns, allowing them to better manage their data center power and cooling needs.

Exciting new business intelligence tools like vXchnge’s award-winning on-site platform even allow colocation customers to monitor network and server performance in real time. By cycling servers down during low-traffic periods and anticipating when power and cooling needs will be highest, data centers have been able to significantly improve their efficiency scores.
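A very reduced version of this kind of analysis is to average historical load by hour of day and read off the expected peak and trough. The sketch below does that with Python’s standard library; the sample data and function names are hypothetical, and production systems use far richer models.

```python
from collections import defaultdict
from statistics import mean


def hourly_profile(samples):
    """Average load per hour of day from (hour_of_day, load_kw) samples."""
    by_hour = defaultdict(list)
    for hour, load_kw in samples:
        by_hour[hour].append(load_kw)
    return {hour: mean(loads) for hour, loads in by_hour.items()}


def peak_and_trough(profile):
    """Return the hours with the highest and lowest average load."""
    peak_hour = max(profile, key=profile.get)
    trough_hour = min(profile, key=profile.get)
    return peak_hour, trough_hour


# Hypothetical readings collected over several days.
history = [(2, 40.0), (2, 44.0), (14, 90.0), (14, 110.0), (20, 70.0)]
profile = hourly_profile(history)
peak, trough = peak_and_trough(profile)
print(f"expect peak demand around hour {peak}, lowest around hour {trough}")
```

The trough hour is a candidate window for cycling servers down, and the peak hour indicates when cooling capacity needs to be ready.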

Data Center Cooling Technologies Defined

Given the importance of data center cooling infrastructure, it’s worth taking a moment to examine some commonly used and new data center cooling technologies.

Calibrated Vectored Cooling (CVC)

A form of data center cooling technology designed specifically for high-density servers. It optimizes the airflow path through equipment to allow the cooling system to manage heat more effectively, making it possible to increase the ratio of circuit boards per server chassis and utilize fewer fans.

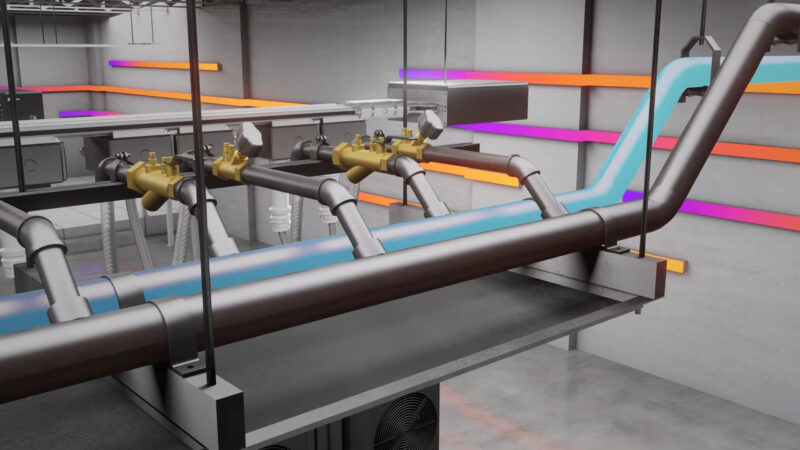

Chilled Water System

A data center cooling system, commonly used in mid-to-large-sized data centers, that uses chilled water to cool air being brought in by air handlers (CRAHs). Water is supplied by a chiller plant located somewhere in the facility.

Cold Aisle/Hot Aisle Design

A common form of data center server rack deployment that uses alternating rows of “cold aisles” and “hot aisles.” The cold aisles feature cold air intakes on the front of the racks, while the hot aisles consist of hot air exhausts on the back of the racks.

Hot aisles expel hot air into the air conditioning intakes to be chilled and then vented into the cold aisles. Empty racks are filled by blanking panels to prevent overheating or wasted cold air.

Computer Room Air Conditioner (CRAC)

One of the most common features of any data center, CRAC units are very similar to conventional air conditioners powered by a compressor that draws air across a refrigerant-filled cooling unit. They are quite inefficient in terms of energy usage, but the equipment itself is relatively inexpensive.

Computer Room Air Handler (CRAH)

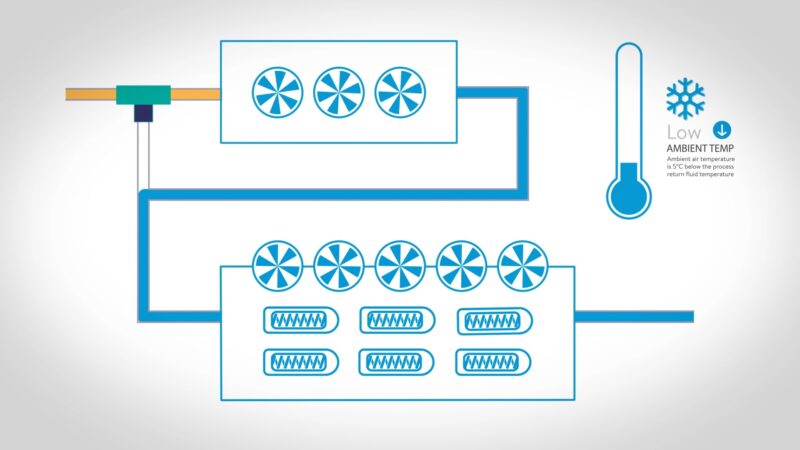

A CRAH unit functions as part of a broader system involving a chilled water plant (or chiller) somewhere in the facility. Chilled water flows through a cooling coil inside the unit, which then uses modulating fans to draw air from outside the facility.

Because they function by chilling outside air, CRAH units are much more efficient when used in locations with colder annual temperatures.

Critical Cooling Load

This measurement represents the total usable cooling capacity (usually expressed in watts of power) on the data center floor for the purposes of cooling servers.
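Because cooling capacity is quoted in several units, a small conversion helper makes it easier to compare the critical cooling load with the IT load. The conversion factors below are standard approximations; the capacity and load figures are hypothetical.

```python
BTU_PER_HR_PER_WATT = 3.412  # approximate conversion factor
KW_PER_TON = 3.517           # one ton of refrigeration in kW, approximate


def watts_to_btu_per_hr(watts):
    """Convert watts to BTU per hour."""
    return watts * BTU_PER_HR_PER_WATT


def watts_to_tons(watts):
    """Convert watts to tons of refrigeration."""
    return watts / 1000.0 / KW_PER_TON


def cooling_headroom_w(critical_cooling_load_w, it_load_w):
    """Usable cooling capacity left over after the current IT load."""
    return critical_cooling_load_w - it_load_w


# Hypothetical data floor: 500 kW of usable cooling, 380 kW of IT load.
headroom = cooling_headroom_w(500_000.0, 380_000.0)
print(f"headroom: {headroom / 1000:.0f} kW, about {watts_to_tons(headroom):.1f} tons")
```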

Direct-to-Chip Cooling

A data center liquid cooling method that uses pipes to deliver coolant directly to a cold plate that is incorporated into a motherboard’s processors to disperse heat. Extracted heat is fed into a chilled-water loop and carried away to a facility’s chiller plant.

Since this system cools processors directly, it’s one of the most effective forms of server cooling systems.

Free Cooling

Any data center cooling system that uses the outside atmosphere to introduce cooler air into the servers rather than continually chilling the same air. While this can only be implemented in certain climates, it’s a very energy-efficient form of server cooling system.

Evaporative Cooling

Manages temperature by exposing hot air to water, which causes the water to evaporate and draw the heat out of the air. The water can be introduced either in the form of a misting system or a wet material such as a filter or mat.

While this system is very energy efficient since it doesn’t use CRAC or CRAH units, it does require a lot of water. Data center cooling towers are often used to facilitate evaporation and transfer excess heat to the outside atmosphere.
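A back-of-the-envelope estimate shows why water use adds up. The sketch below assumes all heat is rejected by evaporation and uses an approximate latent heat of vaporization for water near room temperature; it ignores drift, blowdown, and any heat carried away as warmer air, so treat the result as a rough lower bound.

```python
LATENT_HEAT_J_PER_KG = 2.45e6  # approximate, water near room temperature
JOULES_PER_KWH = 3.6e6


def water_kg_per_kwh_heat():
    """Mass of water evaporated to reject one kWh of heat."""
    return JOULES_PER_KWH / LATENT_HEAT_J_PER_KG


def daily_water_liters(heat_load_kw):
    """Rough daily evaporation for a constant heat load (1 kg of water is about 1 L)."""
    return heat_load_kw * 24.0 * water_kg_per_kwh_heat()


# Hypothetical 1 MW heat load running around the clock.
print(f"{water_kg_per_kwh_heat():.2f} kg per kWh of heat")
print(f"{daily_water_liters(1000.0):,.0f} L per day")
```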

Immersion System

An innovative new data center liquid cooling solution that submerges hardware into a bath of non-conductive, non-flammable dielectric fluid.

Liquid Cooling

Any cooling technology that uses liquid to evacuate heat from the air. Increasingly, data center liquid cooling refers specifically to direct cooling solutions that expose server components (such as processors) to liquid to cool them more efficiently.

Raised Floor

A frame that lifts the data center floor above the building’s concrete slab floor. The space between the two is used for water-cooling pipes or increased airflow.

While power and network cables are sometimes run through this space as well, newer data center cooling designs and best practices place these cables overhead.

Data Center Cooling Market Growth Projections

Recent market analyses indicate an active and ongoing interest in the data center cooling market. Projections from ResearchandMarkets.com suggest it will have a three percent compound annual growth rate (CAGR) between 2020 and 2025.

Moreover, different but related findings from the Market Study Report published in December 2017 specifically focus on new data center cooling technologies and the market worth associated with them. The study indicates even more substantial growth related to the technology aspect but looks at the likely increase in value through 2024, a different forecast window from the previous research.

It reveals a projected CAGR of 12 percent and a total worth of $20 billion. One of the reasons cited for the uptick in the general data center cooling market is the trend of data centers built in developing countries or regions such as Singapore and Latin America.

Analysts believe as data centers start operating in those places, there will be a continual emphasis on running the facilities as efficiently as possible. That reality naturally spurs the likelihood that data center owners and managers will look for innovative options, thereby increasing the worth of innovative new data center cooling technologies, such as liquid cooling.
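For readers unfamiliar with CAGR, it is the constant yearly rate that would take a starting value to an ending value over a given number of years. The sketch below shows the two directions of that calculation; the starting value and time span are arbitrary illustration values, not figures from either report.

```python
def project_value(start_value, cagr, years):
    """Value after compounding start_value at rate cagr for the given years."""
    return start_value * (1.0 + cagr) ** years


def implied_cagr(start_value, end_value, years):
    """Constant yearly growth rate that links start_value to end_value."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Arbitrary example: 100 units growing at 12 percent a year for 2 years.
grown = project_value(100.0, 0.12, 2)
print(f"{grown:.2f}")
print(f"{implied_cagr(100.0, grown, 2):.2%}")
```

The gap between a three percent and a 12 percent rate compounds quickly, which is why the two projections describe very different markets.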

Data Centers and Liquid Cooling Technology

Although air cooling technology has gone through many improvements over the years, it is still limited by a number of fundamental problems. Aside from the high energy costs, air conditioning systems take up a lot of valuable space, introduce moisture into sealed environments, and are notorious for mechanical failures.

Until recently, however, data centers had no other options for meeting their cooling needs. With new developments in liquid cooling, many data centers are beginning to experiment with new methods for solving their ongoing heat problems.

What is Liquid Cooling?

While early incarnations of liquid cooling systems were complicated, messy, and very expensive, the latest generation of the technology provides a more efficient and effective cooling solution. Unlike air cooling, which requires a great deal of power and introduces both pollutants and condensation into the data center, liquid cooling is cleaner, more targeted, and more scalable.

The two most common cooling designs are full immersion cooling and direct-to-chip cooling. Immersion systems involve submerging the hardware itself into a bath of non-conductive, non-flammable dielectric fluid.

Both the fluid and the hardware are contained inside a leak-proof case. Dielectric fluid absorbs heat far more efficiently than air, and as heated fluid turns to vapor, it condenses and falls back into the bath to aid in cooling.

Direct-to-chip cooling uses pipes that deliver liquid coolant directly into a cold plate that sits atop a motherboard’s processors to draw off heat. The extracted heat is then fed into a chilled-water loop to be transported back to the facility’s cooling plant and expelled into the outside atmosphere.

Both methods offer far more efficient cooling solutions for power-hungry data center deployments.
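The efficiency gap comes down to how much heat a given volume of coolant can carry. The sketch below compares water with air using approximate textbook properties and estimates the flow a chilled-water loop would need; the 10 kW load and 10 degree rise are assumed example values, and dielectric fluids have different properties than water.

```python
WATER_DENSITY = 997.0         # kg/m^3, approximate
WATER_SPECIFIC_HEAT = 4180.0  # J/(kg*K), approximate
AIR_DENSITY = 1.2             # kg/m^3, approximate
AIR_SPECIFIC_HEAT = 1005.0    # J/(kg*K), approximate


def volumetric_heat_capacity(density, specific_heat):
    """Heat absorbed per cubic meter per kelvin of temperature rise."""
    return density * specific_heat


def water_flow_lpm(heat_w, delta_t_k):
    """Water flow in liters per minute to remove heat_w with a delta_t_k rise."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    flow_m3s = heat_w / (volumetric_heat_capacity(WATER_DENSITY, WATER_SPECIFIC_HEAT) * delta_t_k)
    return flow_m3s * 1000.0 * 60.0


ratio = (volumetric_heat_capacity(WATER_DENSITY, WATER_SPECIFIC_HEAT)
         / volumetric_heat_capacity(AIR_DENSITY, AIR_SPECIFIC_HEAT))
print(f"water carries roughly {ratio:,.0f}x more heat per unit volume than air")
print(f"10 kW at a 10 K rise needs about {water_flow_lpm(10_000.0, 10.0):.1f} L/min")
```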

AI Energy Demands

Efficiency will be a key concern for data centers in the future. A new generation of processors capable of running powerful machine learning, artificial intelligence, and analytics programs brings with it massive energy demands and generates huge amounts of heat.

From Google’s custom-built Tensor Processing Units (TPUs) to high-performance CPUs paired with graphics processing unit (GPU) accelerators, the processing muscle that’s powering modern AI is already straining the power and cooling capacity of data center infrastructure. With more and more organizations implementing machine learning and even offering AI solutions as a service, these demands will surely increase.

Google found this out the hard way when the company introduced its TPU 3.0 processors. While air-cooling technology was sufficient to cool the first two generations, the latest iteration generated far too much heat to be cooled efficiently with anything short of direct-to-chip liquid cooling.

Fortunately, the tech giant had been researching viable liquid cooling solutions for several years and was able to integrate the new system relatively quickly.

High-Density Server Demands

Even for data center infrastructures that aren’t facilitating AI-driven machine learning, server rack densities and storage densities are increasing rapidly. As workloads continue to grow and providers look at replacing older, less efficient data center cooling systems, they will have more of an incentive to consider liquid cooling solutions because it won’t mean maintaining two separate systems.

Data centers are currently engaged in an arms race to increase rack density to provide more robust and comprehensive services to their clients. From smaller, modular facilities to massive hyperscale behemoths, efficiency and performance go hand in hand as each generation of servers enables them to do more with less.

If the power demands of these deployments continue to grow in the coming years, inefficient air cooling technology will no longer be a viable solution. For many years, liquid cooling could not be used for storage drives because older hard disk drives (HDDs) utilized moving internal parts that could not be exposed to liquid and could not be sealed.

With the rapid proliferation of solid-state drives (SSDs), the development of sealed HDDs filled with helium, and innovative new storage technology like 5D memory crystals, immersion-based cooling solutions have become far more practical and reliable.

Edge Computing Deployments

Liquid cooling systems can deliver comparable cooling performance to air-based systems with a much smaller footprint. This could potentially make them an ideal solution for edge data centers, which are typically smaller and will likely house more high-density hardware in the future.

Designing these facilities from the ground up with liquid cooling solutions will allow them to pack more computing power in a smaller space, lending them the versatility companies are coming to expect from edge data centers. While liquid cooling probably won’t replace air conditioning systems in every data center anytime soon, it is becoming an increasingly attractive solution for many facilities.

For data centers with extremely high-density deployments or providing powerful machine learning services, liquid cooling could allow them to continue to expand their services thanks to their ability to deliver more effective and efficient cooling.

6 Big Mistakes in Data Center Cooling

Since efficiency is so critical to controlling data center cooling costs and delivering consistent uptime service, here are six big mistakes data center managers need to avoid.

1. Bad Cabinet Layout

A good cabinet layout should include a hot-aisle and cold-aisle data center cooling design with your computer room air handlers at the end of each row. Using an island configuration without a well-designed orientation is very inefficient.

2. Empty Cabinets

Empty cabinets can skew airflow, allowing hot exhaust air to leak back into your cold aisle. If you have empty cabinets, make sure your cold air is contained.

3. Empty Spaces Between Equipment

How many times have you seen cabinets with empty, uncovered spaces between the hardware? These empty spaces can ruin your airflow management. If the spaces in the cabinet are not sealed, hot air can leak back into your cold aisle. A conscientious operator will make sure the spaces are sealed.
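Finding those gaps is easy to automate from an equipment inventory. The sketch below lists the rack units in a cabinet that hold no hardware and therefore need a blanking panel; the inventory format (bottom position and height in rack units) and the example cabinet are assumptions made for illustration.

```python
def open_rack_units(cabinet_height_u, occupied):
    """Return the rack unit positions with no equipment installed.

    occupied is a list of (bottom_u, height_u) tuples, positions counted from 1.
    """
    filled = set()
    for bottom_u, height_u in occupied:
        filled.update(range(bottom_u, bottom_u + height_u))
    return [u for u in range(1, cabinet_height_u + 1) if u not in filled]


# Hypothetical 10U cabinet: a 2U server at the bottom and a 4U chassis at U5.
gaps = open_rack_units(10, [(1, 2), (5, 4)])
print("rack units needing blanking panels:", gaps)
```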

4. Raised Floor Leaks

Raised floor leaks occur when cold air leaks under your raised floor and into support columns or adjacent spaces. These leaks can cause a loss of pressure, which can allow dust, humidity, and warm air to enter your cold aisle environment. In order to resolve phantom leaks, someone will need to do a full inspection of the support columns and perimeter and seal any leaks they find.

5. Leaks Around Cable Openings

There are many openings in floors and cabinets for cable management. Whoever inspects under the raised floor for leaks should also look for unsealed cable openings and for holes under remote power panels and power distribution units. If left open, these holes can let cold air escape.

6. Multiple Air Handlers Fighting to Control Humidity

What happens when one air handler tries to dehumidify the air while another unit tries to humidify the same air? The result can be a lot of wasted energy while the two units fight for control. By thoroughly planning your humidity control points, you can reduce the risk of this occurring.
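One way to catch this during planning is to compare setpoints across units: two air handlers fight whenever one is set to add moisture up to a level that another is set to remove. The sketch below flags such pairs; the `AirHandler` structure, the threshold names, and the sample setpoints are all hypothetical.

```python
from dataclasses import dataclass
from itertools import permutations


@dataclass
class AirHandler:
    name: str
    humidify_below: float    # unit adds moisture when RH (%) drops below this
    dehumidify_above: float  # unit removes moisture when RH (%) rises above this


def find_conflicts(units):
    """Return (humidifying_unit, dehumidifying_unit) pairs whose setpoints overlap."""
    conflicts = []
    for a, b in permutations(units, 2):
        # Between b.dehumidify_above and a.humidify_below both units are active.
        if a.humidify_below > b.dehumidify_above:
            conflicts.append((a.name, b.name))
    return conflicts


# Hypothetical setpoints for three units sharing the same data floor.
units = [
    AirHandler("CRAH-1", humidify_below=45.0, dehumidify_above=55.0),
    AirHandler("CRAH-2", humidify_below=35.0, dehumidify_above=40.0),
    AirHandler("CRAH-3", humidify_below=30.0, dehumidify_above=60.0),
]
print(find_conflicts(units))
```

In this made-up example the first unit tries to humidify up to 45 percent while the second dehumidifies anything above 40 percent, so the pair is reported.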

4 Keys to an Optimal Data Center Cooling System

For most businesses, the first step in optimizing their data center cooling system is understanding how data center cooling works – or, more importantly, how data center cooling should work for their specific business and technical requirements.

Here are four best practices for ensuring optimal data center cooling:

1. Use Hot Aisle/Cold Aisle Design

This data center cooling design lines server racks in alternating rows to create “hot aisles” (consisting of the hot air exhaust on the back of the racks) and “cold aisles” (consisting of cold air intakes on the front of the racks). The idea is for the hot aisle to expel hot air into the air conditioning intakes where it is chilled and pushed through the air conditioning vent to be recirculated into the cold aisle.

2. Implement Containment Measures

Since air has a stubborn tendency to move wherever it wants, modern data centers implement further containment measures by installing walls and doors to direct airflow, keeping the cold air in the cold aisles and hot air in the hot aisles. Efficient containment allows data centers to run higher rack densities while reducing energy consumption.

3. Inspect and Seal Leaks in Perimeters, Support Columns, and Cable Openings

Water damage presents a huge problem to data centers and is the second leading cause of data loss and downtime behind electrical fires. Since water damage is often not covered by business insurance policies (and even when it is, there’s no way to replace lost data), data centers cannot afford to ignore the threat.

Fortunately, most leaks are easily detected and preventable with a bit of forethought and caution. Tools such as fluid and chemical sensing cables, zone controllers, and humidity sensors can spot leaks before they become a serious problem.

4. Synchronize Humidity Control Points

Many data centers utilize air-side economizer systems, or “free air-side cooling.” These systems introduce outside air into the data center to improve energy efficiency; unfortunately, they can also allow moisture inside.

Too much moisture in the air can lead to condensation, which will eventually corrode and short out electrical systems. Adjusting the climate controls to reduce moisture may seem like a solution, but that can lead to problems as well.

If the air becomes too dry, static electricity can build up, which can also cause equipment damage. It’s imperative, therefore, that a data center’s humidity controls account for moisture coming in with the outside air to maintain an ideal environment for the server rooms.
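Condensation risk can be estimated from temperature and relative humidity by computing the dew point. The sketch below uses the Magnus approximation with one commonly published set of coefficients; the coefficient choice, function names, and sample conditions are assumptions for illustration rather than a prescribed control method.

```python
import math

MAGNUS_B = 17.62   # dimensionless coefficient (one common choice)
MAGNUS_C = 243.12  # degrees Celsius (one common choice)


def dew_point_c(air_temp_c, rh_percent):
    """Approximate dew point in Celsius from air temperature and relative humidity."""
    if not 0.0 < rh_percent <= 100.0:
        raise ValueError("rh_percent must be in (0, 100]")
    gamma = math.log(rh_percent / 100.0) + MAGNUS_B * air_temp_c / (MAGNUS_C + air_temp_c)
    return MAGNUS_C * gamma / (MAGNUS_B - gamma)


def condensation_risk(surface_temp_c, air_temp_c, rh_percent):
    """True if a surface is at or below the dew point of the surrounding air."""
    return surface_temp_c <= dew_point_c(air_temp_c, rh_percent)


# Hypothetical conditions: 25 C air at 50 percent RH, chilled pipe at 12 C.
print(f"dew point: {dew_point_c(25.0, 50.0):.1f} C")
print("condensation on 12 C surface:", condensation_risk(12.0, 25.0, 50.0))
```

Tracking dew point alongside relative humidity helps when outside air is being introduced, since the same relative humidity means very different moisture content at different temperatures.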

Getting the Most Out of Data Center Cooling

As power demands continue to increase, new data center cooling technologies will be needed to keep facilities operating at peak capacity. Evaluating a data center’s power and cooling capabilities is critical for colocation customers.

By identifying facilities with a solid data center infrastructure in place that can drive efficiencies and improve performance, colocation customers can make better long-term decisions about their own infrastructure. Given the difficulties associated with migrating assets and data, finding a data center partner with the power and cooling capacity to accommodate both present and future needs can provide a strategic advantage for a growing organization.

FAQ

Why is cooling so important for data centers?

Cooling ensures that data center equipment operates efficiently and prevents overheating, which can lead to equipment failure and data loss.

Are there any environmental concerns with data center cooling?

Yes, traditional cooling methods can consume large amounts of water and energy. However, newer cooling technologies are focusing on sustainability and reduced environmental impact.

How do data centers handle power outages?

Most data centers have redundant power supplies, including UPS (uninterruptible power supply) systems and backup generators, to ensure continuous operation even during power disruptions.

What are the environmental benefits of liquid cooling over traditional air cooling?

Liquid cooling is generally more energy-efficient, can reduce water usage, and can allow for more efficient heat reuse.

How often should data centers review their cooling strategies?

Given the rapid advancements in technology and increasing power demands, data centers should regularly review and update their cooling strategies, at least annually.

Are there any safety concerns with liquid cooling?

While liquid cooling involves liquids, the coolants used are typically non-conductive and non-flammable, minimizing risks. However, regular maintenance and checks are essential to prevent leaks.

Final Words

In the digital age, data centers stand as the backbone of our interconnected world. Their role is pivotal, not just in storing and managing data, but in ensuring the seamless operation of countless online services we rely on daily.

As we’ve seen, the power and cooling dynamics of these centers are paramount to their efficiency, cost-effectiveness, and longevity. As technology evolves, so too must our strategies for powering and cooling these behemoths.

Liquid cooling, AI-driven solutions, and predictive analytics are just a few of the innovations shaping the future of data center operations. For businesses and organizations, understanding these dynamics is not just about cost-saving; it’s about future-proofing their digital assets.

As we move forward, the marriage of technology and sustainability in data centers will be crucial, ensuring that as our digital demands grow, we do so responsibly and efficiently.
