Making sure everyone can easily access your website is a priority for any business that wants to reach customers on the devices they prefer.

Whether they use Chrome, Firefox, or Safari, on desktop or mobile, people expect a smooth, straightforward experience when browsing a site.

Yet achieving compatibility across the wide range of browsers and platforms is no simple task.

Compatibility testing is a chance to find where the user experience may break down and to fix inconsistencies before they reach real users. Let us discuss it in greater detail.

Cross-Browser Testing

Cross-browser testing is the process of evaluating a website’s performance and appearance across various web browsers. The primary goal is to identify and address three key areas:

Visual Anomalies

- Misaligned elements on the page

- Incorrect color rendering

- Broken or distorted layouts

Functionality Problems

- Links that do not work as intended

- Buttons or controls that are unresponsive

- Forms that fail to submit or process data correctly

Compatibility and Accessibility Issues

- Ensuring the website is accessible to users with disabilities

- Complying with accessibility standards like the Web Content Accessibility Guidelines (WCAG)

Through cross-browser testing, developers can ensure a consistent and seamless user experience for every visitor, regardless of the browser or assistive technology they use. If you want to learn more about it, visit https://www.lambdatest.com/learning-hub/cross-device-testing.

As you can see, this is an important process for a variety of reasons. One of the most important factors that requires your attention is the user interface.

Why is it Important?

Ensuring compatibility across all browsers expands a website's reach by letting visitors access the content from whichever browser and device they prefer.

Providing a consistent experience across all platforms enhances user satisfaction and engagement levels. Users appreciate websites that allow them to efficiently find what they need regardless of how they access the internet.

Neglecting cross-browser testing can unfortunately lead to user frustration, driving customers away and potentially undermining a business’s reputation. Nobody wants to waste time debugging browser quirks when they visit a site.

Websites that function improperly on certain browsers risk losing the trust and credibility of customers. People rightfully expect sites to work seamlessly regardless of their browsing preferences.

Steps to Efficient Cross-Browser Testing

Now let us look at the steps for efficient cross-browser testing.

Step 1: Perform Code Validation

Conforming to World Wide Web Consortium (W3C) standards helps ensure a website's code stays well-structured, efficient, and compatible across browsers.

Resources like the W3C Markup Validation Service, CSS Validation Service, and HTML Tidy can help detect and correct code problems, strengthening the website’s functionality and search engine optimization performance.

Code validation helps developers avoid common mistakes that cause compatibility problems and helps provide a seamless user experience regardless of the browser used.

Adhering to standards established by the W3C helps all users fully access the content, avoiding frustration. With validation, designers can quickly remedy any coding issues before they inconvenience customers.
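The official W3C validators remain the authoritative check, but a quick local pre-check can catch the most common structural mistake, unclosed or mismatched tags, before you even upload a page. The sketch below uses only Python's standard `html.parser` module; `check_html` and `TagBalanceChecker` are hypothetical names, and the check is deliberately stricter than the HTML specification (it treats optional end tags such as `</p>` and `</li>` as required), so treat it as a rough assumption-laden helper, not a validator.

```python
from html.parser import HTMLParser
from typing import List, Tuple

# Void elements never take a closing tag, so they are not tracked.
VOID_ELEMENTS = {
    "area", "base", "br", "col", "embed", "hr", "img", "input",
    "link", "meta", "source", "track", "wbr",
}


class TagBalanceChecker(HTMLParser):
    """Rough check for unclosed or mismatched tags (not a full validator)."""

    def __init__(self) -> None:
        super().__init__()
        self.stack: List[Tuple[str, int]] = []  # (tag, line) of each open element
        self.problems: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag not in VOID_ELEMENTS:
            self.stack.append((tag, self.getpos()[0]))

    def handle_startendtag(self, tag, attrs):
        # Self-closing syntax such as <br/>: nothing to track.
        pass

    def handle_endtag(self, tag):
        if self.stack and self.stack[-1][0] == tag:
            self.stack.pop()
        else:
            self.problems.append(f"line {self.getpos()[0]}: unexpected </{tag}>")


def check_html(source: str) -> List[str]:
    """Return a list of human-readable tag-balance problems (empty if none)."""
    checker = TagBalanceChecker()
    checker.feed(source)
    checker.close()
    # Anything still on the stack was opened but never closed.
    for tag, line in checker.stack:
        checker.problems.append(f"line {line}: <{tag}> is never closed")
    return checker.problems
```

Running `check_html` on a template before sending it to the W3C Markup Validation Service simply shortens the feedback loop; it does not replace the full validator.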

Step 2: Build a Browser Matrix

Building a browser matrix means listing the target browsers, versions, and platforms that need to be tested.

The matrix should be based on analytics data showing which browsers your audience actually uses, so the most relevant browsers are covered.

Update the matrix regularly to keep pace with new browser releases and shifting usage trends.

This strategy helps to prioritize work, ensuring that the most essential browsers for the user population undergo comprehensive testing.

A well-maintained browser matrix is a practical tool for efficient cross-browser testing, showing where effort will most improve the user experience across browsers.

With an up-to-date matrix, teams can focus their debugging effort where it matters most.
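One simple way to turn analytics into a matrix is to rank browser versions by traffic and keep adding them until a chosen share of visitors is covered. The sketch below assumes you have exported session counts per browser version from your analytics tool; the 95% default target, the function name `build_browser_matrix`, and the session numbers in the usage example are all hypothetical.

```python
from typing import Dict, List, Tuple


def build_browser_matrix(
    sessions: Dict[str, int], coverage_target: float = 0.95
) -> List[Tuple[str, float]]:
    """Pick the most-used browser versions until their combined share reaches coverage_target."""
    total = sum(sessions.values())
    if total <= 0:
        raise ValueError("usage data is empty")

    # Most-used browser versions first.
    ranked = sorted(sessions.items(), key=lambda item: item[1], reverse=True)

    matrix: List[Tuple[str, float]] = []
    covered = 0.0
    for browser, count in ranked:
        if covered >= coverage_target:
            break  # the target share of visitors is already covered
        share = count / total
        matrix.append((browser, share))
        covered += share
    return matrix


# Hypothetical session counts, for illustration only.
sessions = {
    "Chrome 124": 4800, "Safari 17": 2200, "Edge 124": 1200,
    "Firefox 125": 800, "Samsung Internet 24": 600, "Opera 109": 400,
}
matrix = build_browser_matrix(sessions)
```

With these made-up numbers, the five most-used versions cover 96% of sessions, so the long tail is left for occasional spot checks. Re-running the function on fresh analytics exports is one way to keep the matrix current.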

Step 3: Always Verify Feature Support

Not all browsers support the same web features. Check feature support across your target browsers to prevent functionality problems.

Resources like Can I Use show which features each browser supports.

Avoiding deprecated features and relying on modern, widely supported technologies prevents compatibility issues that would compromise the user experience.

Confirming feature support across browsers keeps behavior consistent and improves the experience for all users, whatever browser they prefer.
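Once you have a browser matrix, checking feature support becomes a lookup: for every feature the site relies on, compare each target browser version with the first version that supports it. In the sketch below, the feature names and version numbers in `SUPPORT` are placeholders, not real data; in practice you would fill them in from Can I Use. The function name `find_support_gaps` is hypothetical, and it conservatively treats a browser with no entry as unsupported.

```python
from typing import Dict, List, Tuple

# Hypothetical support data: feature -> {browser: first major version that supports it}.
# Placeholder names and numbers; look up real values on Can I Use.
SUPPORT: Dict[str, Dict[str, int]] = {
    "feature-a": {"chrome": 100, "firefox": 110, "safari": 16},
    "feature-b": {"chrome": 90, "firefox": 90},  # no Safari entry: treated as unsupported
}


def find_support_gaps(
    required: List[str],
    targets: List[Tuple[str, int]],
    support: Dict[str, Dict[str, int]] = SUPPORT,
) -> List[str]:
    """List every (feature, browser) pair in the matrix that lacks support."""
    gaps: List[str] = []
    for feature in required:
        first_supported = support.get(feature, {})
        for browser, version in targets:
            minimum = first_supported.get(browser)
            # Unknown support, or a version older than the first supporting one, is a gap.
            if minimum is None or version < minimum:
                gaps.append(f"{feature} unsupported in {browser} {version}")
    return gaps
```

Each reported gap is a decision point: pick a different feature, add a fallback, or accept the gap for that browser.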

Step 4: Skip Unnecessary Components

Removing unnecessary components simplifies the site and makes cross-browser testing more efficient.

Focus on the core features that give users real value, and drop the rest.

A leaner site has fewer things that can break and is easier to test.

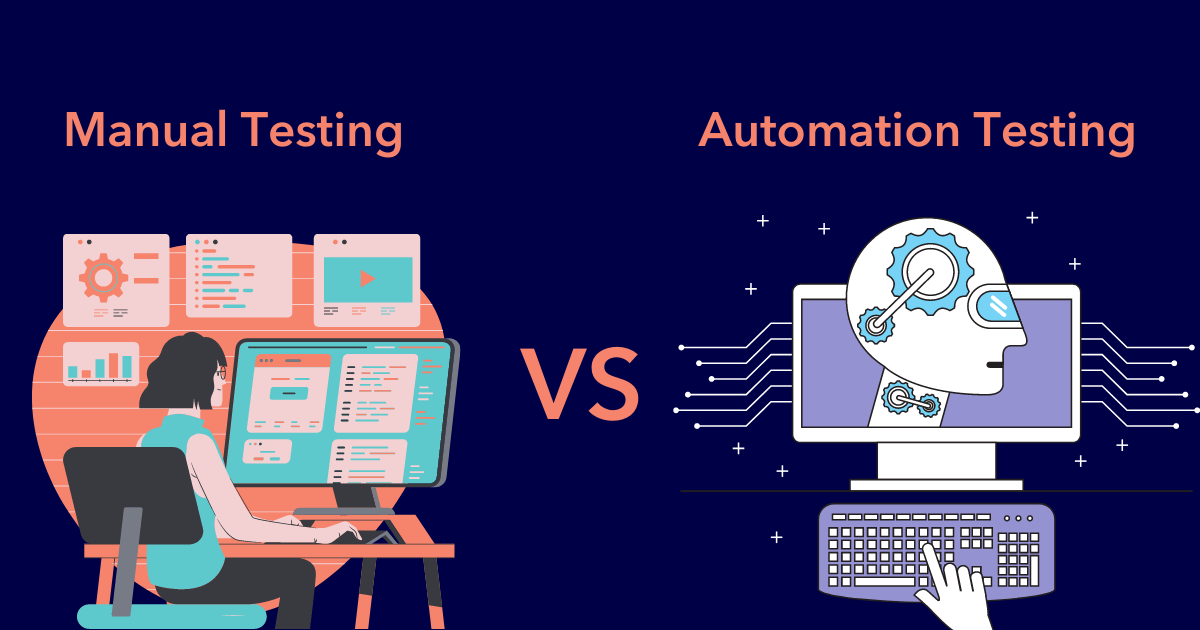

Step 5: Choose the Right Way to Test

Choosing between manual and automated testing is central to an effective testing strategy.

Manual testing is useful for pinpointing visual and user experience problems, while automated testing excels at repetitive tasks and broad coverage.

Cloud testing platforms like BrowserStack or Sauce Labs support both approaches, making it easier to combine their strengths.

Emulators and real devices each have their own advantages, and using both gives more thorough coverage. Choosing techniques carefully keeps the testing process efficient.
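To illustrate the automated side, here is a minimal smoke test that loads the same page in several browsers and checks the page title. It assumes the Selenium 4 Python bindings and local Chrome and Firefox installations; `make_driver` and `smoke_test` are hypothetical names. Cloud platforms such as BrowserStack or Sauce Labs expose remote WebDriver endpoints, so a different `driver_factory` built on `webdriver.Remote` could point the same test at their browsers; their connection details are in each provider's documentation and are not shown here.

```python
from typing import Callable, Dict, Iterable

SUPPORTED = ("chrome", "firefox")


def make_driver(browser: str):
    """Start a local headless browser via Selenium 4 (assumed to be installed)."""
    if browser not in SUPPORTED:
        raise ValueError(f"unsupported browser: {browser}")
    # Imported here so the rest of the module works without Selenium installed.
    from selenium import webdriver

    if browser == "chrome":
        options = webdriver.ChromeOptions()
        options.add_argument("--headless=new")
        return webdriver.Chrome(options=options)
    options = webdriver.FirefoxOptions()
    options.add_argument("-headless")
    return webdriver.Firefox(options=options)


def smoke_test(
    url: str,
    expected_in_title: str,
    browsers: Iterable[str],
    driver_factory: Callable[[str], object] = make_driver,
) -> Dict[str, bool]:
    """Load the same page in each browser and record whether the title looks right."""
    results: Dict[str, bool] = {}
    for name in browsers:
        driver = driver_factory(name)
        try:
            driver.get(url)
            results[name] = expected_in_title in driver.title
        finally:
            driver.quit()  # always release the browser, even if the check fails
    return results
```

A call such as `smoke_test("https://example.com", "Example Domain", ["chrome", "firefox"])` returns one pass/fail flag per browser. Passing the driver factory in as a parameter keeps the check itself independent of where the browsers run, which is also what makes it easy to test with stand-in drivers.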

Step 6: Encapsulate Code in Frameworks

Building on established frameworks can make cross-browser compatibility easier to achieve.

Frameworks like Bootstrap provide pre-built components for responsive layouts, along with baseline styles that smooth over browser differences, helping keep the look consistent across browsers.

Building on a framework reduces the risk of compatibility issues and can speed up development.

Step 7: Implement Continuous Testing

Testing continuously throughout the development lifecycle is essential for maintaining cross-browser compatibility.

Integrating cross-browser tests into continuous integration/continuous delivery (CI/CD) pipelines means every change is tested promptly, so problems are caught early.

Starting early and testing often keeps problems from becoming entrenched in the codebase, which makes them simpler and cheaper to fix.

Regular testing helps maintain a high-quality user experience and confirms that the website stays compatible with evolving browsers and technologies.
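In a CI/CD pipeline, cross-browser results only help if a failure actually stops the build. Most CI systems treat a non-zero exit code as a failed step, so a small gate like the one below can sit at the end of the test stage. It assumes the results arrive as a mapping of browser name to pass/fail (for example, from an automated smoke test); `ci_exit_code` is a hypothetical name, and it deliberately treats an empty result set as a failure, since "nothing ran" should not look like "everything passed".

```python
from typing import Dict


def ci_exit_code(results: Dict[str, bool]) -> int:
    """Print a per-browser summary and return 0 only if every browser passed."""
    if not results:
        print("no cross-browser results were produced")
        return 1

    for name in sorted(results):
        print(f"{'PASS' if results[name] else 'FAIL'}  {name}")

    failures = sorted(name for name, passed in results.items() if not passed)
    if failures:
        print(f"{len(failures)} browser(s) failed: {', '.join(failures)}")
        return 1
    return 0
```

A pipeline step would end with `sys.exit(ci_exit_code(results))`, so any browser failure marks the build as failed and the problem is caught on the change that introduced it.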

Manual vs. Automated Cross-Browser Testing

Each approach, manual or automated, has its own strengths and limitations.

Manual testing remains essential for detecting visual issues and evaluating the overall user experience.

It lets testers explore the site freely and spot subtle problems that scripted checks may miss. However, it is time-consuming and does not scale to exhaustive testing.

Automated testing, on the other hand, is efficient for repetitive tasks and large-scale testing. It can quickly confirm functionality across many browsers and devices.

Combining both methods provides comprehensive coverage, with manual testing focused on critical areas and automation handling routine checks.

Drawing on the strengths of each technique leads to a more balanced and effective cross-browser testing strategy.
